Today I want to share an example of a simple optimization I’ve recently made for multiple reports I’ve had to generate. It basically boils down to moving work to your SQL server (your SQL server is awesome at crunching huge amounts of data).

Here’s the full story:

For four years now I’ve been developing the Festivalmanager - a web based management software used for internal attendances at the Wacken Open Air Festival.

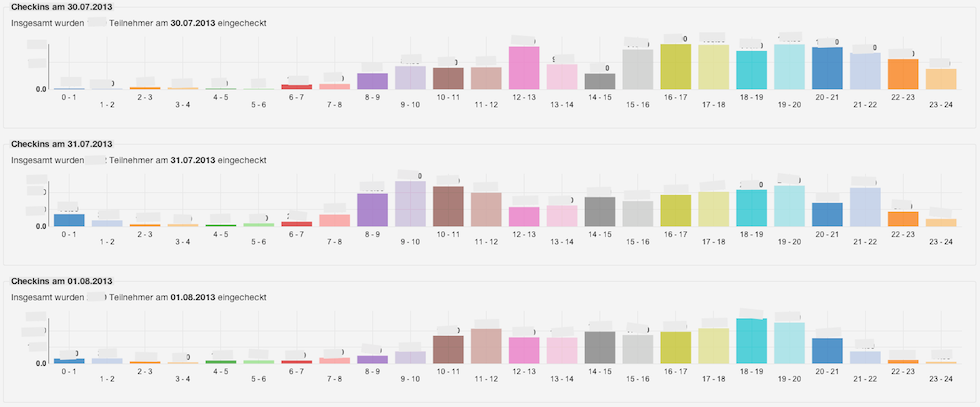

The application has multiple views displaying aggregate informations, e.g. the number of attendances checked in per hour and per day, for every day the festival is running.

The data for the above chart was original generated using this ruby snippet:

require 'date'

today = Date.today

# imagine we are interested in the past 10 days worth of data

days_in_timespan = (Date.new(today.year, today.month, today.day - 10)..today)

days_in_timespan.each do |date|

23.times do |hour|

from_date = Date.new(date.year, date.month, date.day, hour, 0)

to_date = Date.new(date.year, date.month, date.day, hour + 1, 0)

sql = <<-SQL

SELECT count(*)

FROM events

WHERE

events.created_at > '#{from_date.strftime('%Y-%m-%d %H:%M')}'

AND events.created_at < '#{to_date.strftime('%Y-%m-%d %H:%M')}'

SQL

events_on_day_and_hour = execute(sql)

end

end

Now this gives us correct results. But over the years, as the dataset continued to grow the report became slow to generate.

The reason for this was the total number of SQL queries executed -

While every single SQL query was blazing fast, they all require a roundtrip to the database server and back.

By moving the calculation of events per hour per day to the SQL server thus reducing the total number of SQL queries executed I was able to reduce the time to generate the above report from ~30 seconds to < 150ms (that’s an impressive speedup of nearly 200%!).

See the updated SQL query below:

WITH data (count, hour) AS (

SELECT

count(*) AS count,

extract(hour FROM created_at) AS hour

FROM events

WHERE

created_at::date = '#{date.strftime('%Y-%m-%d')}'::date

GROUP BY hour

)

SELECT

coalesce(data.count, 0) AS count,

coalesce(data.hour, series.hour) AS hour

FROM data

RIGHT OUTER JOIN

(SELECT 0, * FROM generate_series(0,23) AS hour) AS series

ON

series.hour = data.hour;

As you can see I’m using PostgreSQL here along with Common Table Expressions to fetch the number of events of every hour of a single day. Because there might be timespans without data points I’ve had to right outer join the result set with a generated_series which only contains zero values.

Note that I’d always start with the first approach. But when the need arises it’s nice to know that there’s always plenty of room to speed things up :)

UPDATE After reading the last Postgres Weekly I’ve adjusted the query - while the original speed stayed the same it’s much more understandable now:

SELECT

extract(hour from hour) as hour, count(*) as count

FROM

generate_series('#{date.strftime("%Y-%m-%d")} 00:00'::timestamp, '#{date.strftime("%Y-%m-%d")} 23:00'::timestamp, '1 hour') AS hour

LEFT JOIN events

ON created_at::date = '2013-08-03'::date AND date_trunc('hour', created_at) = hour

GROUP BY 1

ORDER BY hour;