Over the holidays I decided to get rid of the Slack Desktop application, a very RAM-hungry application I use constantly. Moving to the browser client is not an option, as I have way too many tabs open in every browser you can imagine. Since I’m constantly using the terminal anyway, I decided to replace the Slack Desktop application with irssi, a command-line IRC client.

Now irssi works great with Slack via Slack’s IRC gateway, but I also wanted to receive messages while I was offline. To the best of my knowledge, this is only possible by running an IRC bouncer.

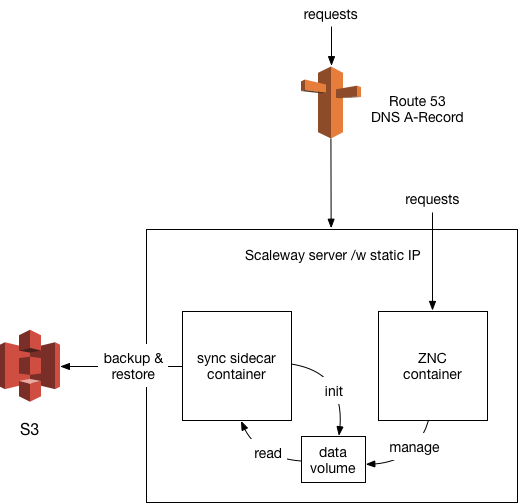

In this blog post I will give a top-level overview of how to run ZNC, an IRC bouncer, on Scaleway, exposing the bouncer via Amazon Route 53. All in all, this should cost you about 5€ per month.

Overview

We’ll make ZNC publicly accessible under a subdomain managed by AWS Route 53. The subdomain points to a static Scaleway IP, which we’ll attach to a Scaleway server instance.

Using a static IP allows us to cycle the underlying instance at any time without requiring changes on the AWS Route 53 side.
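
To confirm that the subdomain still points at the static IP after the instance has been cycled, a small helper can compare the resolved A record with the expected address. This is a sketch: check_dns is a hypothetical name, it assumes dig is installed, and the hostname and IP are placeholders from the documentation address ranges.

```shell
#!/bin/sh
# Hypothetical helper: check that the ZNC hostname still resolves to the static Scaleway IP,
# e.g. after the server instance attached to that IP has been replaced.
# Requires dig; hostname and IP are placeholders.

check_dns() {
  hostname=$1
  expected_ip=$2
  # +short prints only the answer data; take the first record
  resolved=$(dig +short "$hostname" A | head -n 1)
  if [ "$resolved" = "$expected_ip" ]; then
    echo "ok: $hostname -> $resolved"
  else
    echo "mismatch: $hostname -> ${resolved:-<none>} (expected $expected_ip)" >&2
    return 1
  fi
}

# Usage: check_dns znc.example.com 203.0.113.10
```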

The Scaleway server will be provisioned to run two containers: one for ZNC itself, and a sidecar which synchronizes data to and from AWS S3 for durable storage.

Note that the choice of AWS S3 for durable storage is an implementation detail that can easily be changed by adjusting the sidecar.

ZNC container

ZNC is not yet available as a container image, but there’s an unmerged PR which works just fine. I’ve published an image on Docker Hub, which is based on that PR and can be rebuilt with the following steps:

    $ git clone https://github.com/znc/znc.git /tmp/znc
    $ curl -L -o /tmp/znc/Dockerfile https://raw.githubusercontent.com/torarnv/znc/ed457d889db012c645557bd3cb494139807486e8/Dockerfile
    $ cd /tmp/znc
    $ git submodule update --init --recursive
    $ docker build -t znc:$(git rev-parse HEAD) .

The image I’ve built is based on alpine:3.4, and ZNC’s data directory is changed to /opt/znc.
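
For trying the image locally, for example when preparing the setup before pushing data to S3, a hypothetical launcher could mount a host directory at /opt/znc. It assumes the image's default command starts ZNC and that your ZNC configuration listens on port 6697; /mnt/data mirrors the path the sync sidecar uses. On the server itself, the containers are run by systemd units instead.

```shell
#!/bin/sh
# Hypothetical launcher for the ZNC image.
# Assumptions: the host directory DATA_DIR holds the ZNC data (config, logs, certificate),
# and the ZNC config defines a listener on ZNC_PORT.
ZNC_IMAGE=${ZNC_IMAGE:-nicolai86/znc:b4b085dc2db69b58f2ad3bb4271ff3789e8301b5}
DATA_DIR=${DATA_DIR:-/mnt/data}
ZNC_PORT=${ZNC_PORT:-6697}

run_znc() {
  # Mount the host data directory at /opt/znc, the image's data directory
  docker run -d --name znc \
    -p "$ZNC_PORT:$ZNC_PORT" \
    -v "$DATA_DIR:/opt/znc" \
    "$ZNC_IMAGE"
}

# Usage: run_znc
```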

Sidecar container

The synchronization sidecar ensures that the ZNC configuration as well as all logs end up in AWS S3. If no local data exists, it restores the data from S3 instead. This way we end up with a working setup whenever we cycle the server instance, since the data in S3 seeds the new instance.

The container itself only contains rclone and runs the synchronization on startup as well as periodically via crond. The script executing the synchronization is just a couple of lines:

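    #!/bin/sh
    # s3-sync.sh: restore from S3 into an empty data directory,
    # otherwise mirror the local data to S3
    export LOCAL_PATH=/mnt/data

    echo "Running S3 Sync"

    if [ -z "$(ls -A "$LOCAL_PATH")" ]; then
      # Fresh instance: seed the local directory from S3
      echo "overwriting local."
      rclone sync "remote:$S3_REMOTE_PATH" "$LOCAL_PATH"
    else
      # Local data exists: make S3 an exact copy of it
      echo "overwriting remote."
      rclone sync "$LOCAL_PATH" "remote:$S3_REMOTE_PATH"
    fi

    echo "S3 Sync is done"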
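
The sidecar runs this script on startup and periodically via crond. A minimal sketch of such an entrypoint follows. It assumes an Alpine-based image with busybox crond (which reads root's jobs from /etc/crontabs/root), the script installed at /usr/local/bin/s3-sync.sh, and a 15-minute interval matching the worst-case data loss mentioned at the end of this post. entrypoint.sh and its start argument are hypothetical, not the published image's actual layout. Depending on the cron implementation, jobs may not inherit the container's environment, so check that S3_REMOTE_PATH and the AWS credentials are visible to the job.

```shell
#!/bin/sh
# entrypoint.sh (hypothetical): run s3-sync.sh once on startup, then every 15 minutes via crond.
# Assumed paths: sync script location and busybox crond's crontab file.
SYNC_SCRIPT=${SYNC_SCRIPT:-/usr/local/bin/s3-sync.sh}
CRONTAB_FILE=${CRONTAB_FILE:-/etc/crontabs/root}

install_crontab() {
  # Fields: minute hour day-of-month month day-of-week command
  echo "*/15 * * * * $SYNC_SCRIPT" > "$CRONTAB_FILE"
}

start() {
  install_crontab
  "$SYNC_SCRIPT"   # initial sync: restores from S3 when the data directory is empty
  exec crond -f    # keep crond in the foreground as the container's main process
}

# The container would run "entrypoint.sh start"; without that argument only the functions are defined.
if [ "${1:-}" = "start" ]; then
  start
fi
```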

rclone requires a configuration file to be present. Since the AWS credentials are read from the environment (env_auth), the key fields stay empty and the file essentially only specifies the remote type and the AWS region we’re using:

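    # .rclone.conf
    [remote]
    type = s3
    # read credentials from the environment
    env_auth = true
    access_key_id =
    secret_access_key =
    region = eu-central-1
    endpoint =
    # must match the region; only used when rclone creates a bucket
    location_constraint = eu-central-1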
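
With env_auth enabled, rclone picks up AWS credentials from the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables. A hypothetical way to start the sidecar by hand, forwarding those variables and the S3_REMOTE_PATH read by s3-sync.sh, might look like this. The host directory, container name and bucket path are placeholders; in the actual setup the containers are managed by systemd units created by Terraform.

```shell
#!/bin/sh
# Hypothetical launcher for the sync sidecar.
# rclone (env_auth) reads AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY;
# s3-sync.sh reads S3_REMOTE_PATH in the form "bucket/prefix".
SYNC_IMAGE=${SYNC_IMAGE:-nicolai86/rclone-sync:v0.1.4}
DATA_DIR=${DATA_DIR:-/mnt/data}

run_sync_sidecar() {
  bucket_path=$1
  # "-e NAME" without a value forwards NAME from the calling shell's environment
  docker run -d --name znc-sync \
    -e AWS_ACCESS_KEY_ID \
    -e AWS_SECRET_ACCESS_KEY \
    -e S3_REMOTE_PATH="$bucket_path" \
    -v "$DATA_DIR:/mnt/data" \
    "$SYNC_IMAGE"
}

# Usage: run_sync_sidecar my-znc-bucket/znc
```

Mounting the same host directory into both containers (at /opt/znc for ZNC, at /mnt/data for the sidecar) is what lets the sidecar see ZNC's configuration and logs.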

Automation

The entire setup is automated via HashiCorp’s Terraform, and I’ve published it on GitHub as well. It consists of three small modules which take care of everything you need:

- s3, which creates an S3 bucket as well as an IAM user with the permissions required to read and write data to the S3 bucket.
- znc, which creates a static IP and a server on Scaleway, and sets up the instance by creating systemd units to run the container images described above.
- r53, which takes the static IP output of the znc module and sets up a new AWS Route 53 subdomain.

The root configuration wiring the three modules together looks like this:

    # Root module: wires the s3, znc and r53 modules together
    provider "aws" {}

    variable "bucket_name" {}

    # S3 bucket plus an IAM user that may read and write it
    module "s3" {
      source         = "./modules/s3"
      s3_bucket_name = "${var.bucket_name}"
    }

    provider "scaleway" {
      region = "ams1"
    }

    variable "znc_container_image" {
      default = "nicolai86/znc:b4b085dc2db69b58f2ad3bb4271ff3789e8301b5"
    }

    variable "sync_container_image" {
      default = "nicolai86/rclone-sync:v0.1.4"
    }

    # Static IP and server on Scaleway running the ZNC and sync containers,
    # using the IAM credentials and bucket created by the s3 module
    module "znc" {
      source                = "./modules/znc"
      aws_access_key_id     = "${module.s3.aws_access_key_id}"
      aws_secret_access_key = "${module.s3.aws_secret_access_key}"
      aws_s3_bucket_name    = "${module.s3.aws_s3_bucket_name}"
      znc_container_image   = "${var.znc_container_image}"
      sync_container_image  = "${var.sync_container_image}"
    }

    variable "hosted_zone_id" {}

    variable "hostname" {}

    # Route 53 record pointing the hostname at the static IP
    module "r53" {
      source         = "./modules/r53"
      hosted_zone_id = "${var.hosted_zone_id}"
      service_ip     = "${module.znc.ip}"
      hostname       = "${var.hostname}"
    }
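
Applying this configuration needs values for the three variables that have no defaults. A sketch of a small deploy wrapper follows; the deploy function name and all values are placeholders, and it assumes AWS and Scaleway credentials are already available to the providers, for example through their environment variables.

```shell
#!/bin/sh
# Hypothetical wrapper around the Terraform CLI for the root configuration.
deploy() {
  bucket_name=$1
  hosted_zone_id=$2
  hostname=$3
  # Download providers/modules, then create or update all resources
  terraform init &&
    terraform apply \
      -var "bucket_name=$bucket_name" \
      -var "hosted_zone_id=$hosted_zone_id" \
      -var "hostname=$hostname"
}

# Usage: deploy my-znc-bucket Z0000000EXAMPLE znc.example.com
```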

Closing thoughts

Using containers to deploy ZNC has the nice upside that native modules can easily be added in the build step: all you need to do is copy the module’s source code into the modules folder before building the image.
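
Following that approach, a hypothetical helper could copy a module's source into the ZNC checkout from the build steps and rebuild the image. The /tmp/znc path comes from those steps; the function name, the mymodule.cpp file name and the -custom tag suffix are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: bake an extra native module into the ZNC image.
# Assumes the ZNC checkout (with the Dockerfile) lives in ZNC_SRC.
ZNC_SRC=${ZNC_SRC:-/tmp/znc}

build_with_module() {
  module_src=$1   # e.g. ./mymodule.cpp (placeholder)
  cp "$module_src" "$ZNC_SRC/modules/" || return 1
  # Build in a subshell so the caller's working directory is unchanged
  (cd "$ZNC_SRC" && docker build -t "znc:$(git rev-parse HEAD)-custom" .)
}

# Usage: build_with_module ./mymodule.cpp
```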

The Scaleway server instance has a deliberately minimal configuration, as I’d like to move the entire setup onto an orchestration system like Kubernetes someday. Using a sidecar container is a preparation for this, as it allows me to move the entire setup over without big adjustments. Operationally speaking, a move to k8s would also address many blind spots of this setup: no self-healing, deployments with downtime, no monitoring, …

Since I’m using AWS S3 as the backup medium, it’s very easy to configure the entire setup locally, synchronize the data to S3, and then create the production setup using Terraform: all data is fetched from S3 and things continue running.

One thing I still need to address is a publicly valid SSL certificate, which requires some Let’s Encrypt integration. As this is very easy to integrate into a k8s cluster, it’s left out for now: the setup runs on a self-signed certificate. The synchronization also needs adjustments: in the worst case, if the server crashes and its local data can’t be recovered, I’ll lose up to 15 minutes’ worth of data.