I often think HashiCorp could do a better job of establishing good patterns for creating identical environments from a single source of truth with terraform and terraform cloud. This is my take on building a really solid continuous deployment setup for infrastructure with terraform and terraform cloud. Here is what I want to achieve:

- full end-to-end automation. After a pull request is merged, no human interaction should be required to promote changes to all environments. Local apply for terraform is a non-starter, as is local state. (Why does terraform even support this?)

- a single source of truth for all environments. Having to update multiple repositories, or multiple folders with an identical change is a non-starter.

- retain the option of emergency actions such as reverts or skipping environments

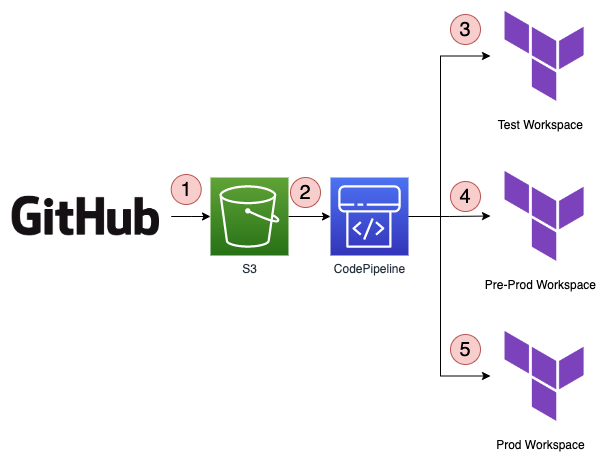

Let’s say we have three environments which we want to provision with terraform: Test, Pre-Prod and Prod. The basic structure for a small CD setup looks like this:

example CD system with AWS CodePipeline and terraform cloud

This is how it works in detail:

- GitHub pushes an artifact to S3 on merge to your main branch. This is required to trigger AWS CodePipeline.

- AWS CodePipeline starts a new execution. The pipeline is configured to deploy the Test, Pre-Prod and Prod workspaces in order.

- CodePipeline performs an API-driven deployment of the Test workspace

- after the Test workspace completes, CodePipeline continues to Pre-Prod

- after the Pre-Prod workspace completes, CodePipeline continues to Prod

I’m using AWS CodePipeline as an example here; any continuous deployment system that supports custom actions and validations should work.
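Independent of the CD system, the promotion order above boils down to a small loop. Here is a minimal Python sketch of it. The workspace names and the `deploy` callable are my own placeholders, not anything terraform cloud or CodePipeline prescribes; in a real pipeline `deploy` would trigger an API-driven run and wait for it to finish.

```python
from typing import Callable, Iterable, List, Optional, Tuple

# Hypothetical workspace names, one per environment.
WORKSPACES = ["test", "pre-prod", "prod"]


def promote(
    workspaces: Iterable[str], deploy: Callable[[str], bool]
) -> Tuple[List[str], Optional[str]]:
    """Deploy workspaces in order and stop at the first failure.

    Returns (deployed, failed); failed is None when every stage succeeded.
    """
    deployed: List[str] = []
    for name in workspaces:
        if not deploy(name):
            # Later environments are never touched after a failure.
            return deployed, name
        deployed.append(name)
    return deployed, None


# Stand-in deploy function that pretends the Pre-Prod run fails.
print(promote(WORKSPACES, lambda name: name != "pre-prod"))
# prints (['test'], 'pre-prod')
```

The point of the sketch is the stop-on-failure behaviour: a broken change never reaches Prod because the stage before it did not complete.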

Some desirable properties of this setup:

- we have a single source of truth for all environments which we expect to be identical

- changes are automatically promoted throughout all environments

- all execution is remote via terraform cloud, all state is remote in individual workspaces. No local credentials.

- variables are captured in the terraform cloud workspaces

- we can pause deployment in individual stages in case of failures or emergencies

- we can skip stages by running terraform cloud's API-driven workflow locally in case of emergencies

This is in stark contrast to having multiple folders or multiple repositories, one for each environment.

Using a continuous delivery system for your infrastructure automation opens up plenty of possibilities to gate deployments:

- only deploy during business hours, on work days and not weekends or statutory holidays

- add (manual) approval steps, if required

- add end-to-end infrastructure validation, e.g. by running canary tests against the managed system
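The first gate in that list is simple enough to sketch in a few lines of Python. The business hours and the holiday set are assumptions for illustration, and the function expects a naive `datetime` that is already in your team's local time.

```python
from datetime import date, datetime, time
from typing import FrozenSet

# Assumed business hours; adjust to your team's schedule.
BUSINESS_START = time(9, 0)
BUSINESS_END = time(17, 0)


def in_deploy_window(now: datetime, holidays: FrozenSet[date] = frozenset()) -> bool:
    """True on Monday to Friday, within business hours, outside listed holidays."""
    if now.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return False
    if now.date() in holidays:
        return False
    return BUSINESS_START <= now.time() < BUSINESS_END
```

A pipeline stage could call something like this before starting a run and simply fail or wait when it returns `False`.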

Lastly, you can also build deployment workflows that monitor, for example, AWS CloudWatch alarms as you deploy, and bail out and revert if your infrastructure deployment causes issues. If you’ve ever used AWS CloudFormation with AWS CodePipeline this should sound familiar: it is much the same setup that AWS offers out of the box, but with terraform cloud as the execution engine and state store.
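A minimal sketch of the alarm check, assuming you poll for a fixed number of rounds after a deployment. `get_states` is a hypothetical callable returning a mapping of alarm name to state; with boto3 it could be built on the CloudWatch `describe_alarms` call and the `StateValue` field of each alarm, but that wiring is left out here so the logic stays testable on its own.

```python
import time
from typing import Callable, Dict


def watch_alarms(
    get_states: Callable[[], Dict[str, str]],
    checks: int,
    interval_seconds: float = 0.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll alarm states `checks` times.

    Returns False as soon as any alarm reports ALARM, True otherwise.
    """
    for attempt in range(checks):
        states = get_states()
        if any(state == "ALARM" for state in states.values()):
            return False  # caller should bail out and revert
        if attempt < checks - 1:
            sleep(interval_seconds)
    return True
```

What "revert" means is up to the workflow; one option is to re-apply the last known good configuration version.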

Now, using terraform cloud API-driven workflow comes with some downsides as well:

- you need to build your own GitHub/GitLab integration, e.g. to execute speculative plans on pull requests.

- you need to build and maintain your own terraform cloud integration, e.g. with AWS CodePipeline, AWS Step Functions and AWS Lambda.

- you need to give terraform cloud administrative access to your AWS accounts, unless you are using self-hosted terraform cloud agents.
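To give a rough idea of what a home-grown speculative plan involves: as far as I understand the terraform cloud API, you create a configuration version with `speculative` set to true, upload your code to the upload URL from the response, and a plan-only run is queued. The sketch below only builds the first request and does not send it. The workspace ID and token are placeholders.

```python
import json
import urllib.request

API = "https://app.terraform.io/api/v2"


def speculative_config_version_request(
    workspace_id: str, token: str
) -> urllib.request.Request:
    """Build (but do not send) the request that creates a speculative configuration version."""
    payload = {
        "data": {
            "type": "configuration-versions",
            "attributes": {
                # Queue a run as soon as the configuration is uploaded.
                "auto-queue-runs": True,
                # Plan-only: runs from this version can never be applied.
                "speculative": True,
            },
        }
    }
    return urllib.request.Request(
        f"{API}/workspaces/{workspace_id}/configuration-versions",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/vnd.api+json",
        },
    )


# Placeholder workspace ID and token, for illustration only.
request = speculative_config_version_request("ws-EXAMPLE", "not-a-real-token")
print(request.get_method(), request.full_url)
```

Posting the plan result back to the pull request is the part you still have to build yourself.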

Now, let’s look in more detail at the different ways you can build a terraform cloud API-driven integration with AWS:

- You can use AWS CodeBuild. Writing a buildspec is straightforward, but you’ll need to roll your own emergency apply script.

- You can write a custom AWS Lambda action. This takes a little more effort; however, you can apply good engineering practices by writing tests, and you can build your Lambda so that it doubles as a CLI for emergency operations.

- You can build an AWS Step Functions workflow. This is the most involved option, but it allows you to handle more complex scenarios, such as automatically reverting to the last known good configuration version.
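For the Lambda option, the trick is to keep the deployment logic in one function and put thin entry points around it. Below is a sketch under a few assumptions: `start_run` and `get_status` are hypothetical callables wrapping the terraform cloud runs API, the workspace has auto-apply enabled, and the CodePipeline action passes its `UserParameters` as JSON like `{"workspace": "test"}`. Reporting the result back to CodePipeline is left out.

```python
import argparse
import json
import time
from typing import Callable, Dict, List

# Terminal run statuses from the terraform cloud runs API.
SUCCEEDED = {"applied", "planned_and_finished"}
FAILED = {"errored", "discarded", "canceled", "force_canceled"}


def deploy(
    workspace: str,
    start_run: Callable[[str], str],
    get_status: Callable[[str], str],
    polls: int = 120,
    delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Start a run and poll until it reaches a terminal status."""
    run_id = start_run(workspace)
    for _ in range(polls):
        status = get_status(run_id)
        if status in SUCCEEDED:
            return True
        if status in FAILED:
            return False
        sleep(delay)
    return False  # treat a timeout as a failure


def workspace_from_event(event: Dict) -> str:
    """Lambda entry: read the workspace from a CodePipeline job event."""
    job = event["CodePipeline.job"]
    config = job["data"]["actionConfiguration"]["configuration"]
    return json.loads(config["UserParameters"])["workspace"]


def parse_args(argv: List[str]) -> argparse.Namespace:
    """CLI entry: the same workspace argument, for emergency use."""
    parser = argparse.ArgumentParser(description="Deploy a single workspace")
    parser.add_argument("workspace")
    return parser.parse_args(argv)
```

Both entry points end up calling `deploy`, so the emergency path runs exactly the code the pipeline runs, and the core can be unit tested with fake `start_run` and `get_status` functions.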

Personally I wish HashiCorp were more prescriptive about environment management. This would keep small startups and companies from building monolithic workspaces, or monolithic modules with local state, and save a ton of work down the road refactoring their way into a manageable situation.

For now I’ll sidestep the discussion of how to connect multiple terraform cloud workspaces, or how to split workspaces into modules. That is something for a future post.